In my last post, I explained what TINA-TI is and introduced how to use a transient simulation to check a circuit’s DC operating condition. Figure 1 shows the results of this simulation.

Figure 1: Transient simulation results using the LMH5401 TINA-TI reference design

This simulation is one of my favorites as an applications engineer, because it tells me whether:

- The power supplies are at the right voltage.

- The input voltage is in the correct range.

- The common-mode voltage is set correctly.

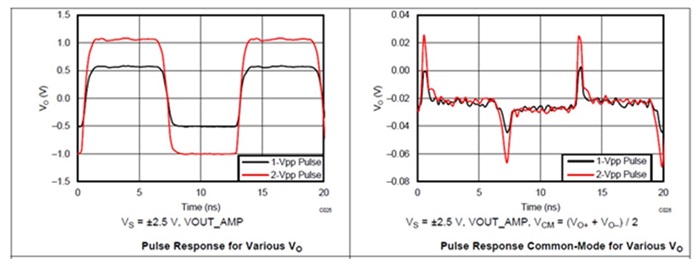

When these three conditions are set correctly, the output signal will be uniform and undistorted. Figure 1 shows that the VIN signal is centered on the proper input common-mode voltage of 2.5V, and the output voltage has the proper amplitude as well. So these conditions look good, but adding another probe, as shown in Figure 2, will help to confirm that everything is set up correctly. More on that later.

Figure 1 also shows the PD (power-down) chart on the top row. The power-down pin is toggled, which turns the amplifier on and off. Why is the output signal still visible when the amplifier is off? And why does the input signal change so much?

The output signal that is visible during the “off” time is the feed-through caused by the feedback resistor network. This is why nearly all fully differential amplifiers (FDAs) are not suitable for multiplexing; they are never really “off.”

The input signal changes because the input termination changes. The 8-GHz, ultra-wideband LMH5401, like most FDAs, has active termination when driven from a single-ended source. This means that the input impedance, as seen by the driving source, is set by the amplifier’s closed-loop gain. Since the amplifier does not operate during power down, the loop gain is gone, and all that is left is the passive feedback network. As a side note, this active termination allows much lower input noise.
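To make the link between closed-loop gain and input impedance concrete, here is a minimal sketch using the textbook expression for an ideal, balanced FDA driven from a single-ended source. The function name and the resistor values (`r_g`, `r_f`) are hypothetical and chosen only for illustration; they are not taken from the LMH5401 reference design, and the model ignores any external termination resistor and the amplifier's finite bandwidth.

```python
def active_input_impedance(r_g: float, r_f: float) -> float:
    """Ideal single-ended input impedance seen looking into the gain resistor.

    Assumes a balanced FDA (matched R_G and R_F on both sides) with
    very high loop gain, so the closed-loop gain is simply R_F / R_G.
    """
    gain = r_f / r_g
    # The amplifier input pins move with the signal, which reduces the
    # current drawn through R_G and raises the apparent input impedance.
    return r_g / (1.0 - gain / (2.0 * (1.0 + gain)))


# Hypothetical resistor values, for illustration only.
r_g = 100.0  # ohms
r_f = 200.0  # ohms

z_in_active = active_input_impedance(r_g, r_f)
print(f"Closed-loop gain: {r_f / r_g:.1f} V/V")
print(f"Input impedance with the amplifier active: {z_in_active:.1f} ohms")
```

With the amplifier powered down, the feedback action behind this expression disappears, so the source sees only the passive resistor network and the voltage at the input changes accordingly.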

Now let’s take a look at a transient response. But before we can do that, we should add some more probe points.

The VOUT waveform is OK for starters, but it has no DC information (it is differential only). By adding a probe on one of the amplifier output pins that is referenced to ground, we can see the DC level of the amplifier outputs. Since the output signals on the amplifier output pins are designed to be symmetrical, we only need one probe. This is shown in Figure 2 as the VF1 probe, which is ground referenced.

In Figure 2, I also moved the VIN probe to look at the amplifier input pin (INN). This will enable us to make sure the amplifier input range is valid. Notice that this probe is also ground referenced.

Figure 2: LMH5401 schematic with additional probes

In the results shown in Figure 3, VF1 ranges from 1.97V to 3.0V. This is within the datasheet output swing limits of 1.1V to 3.9V. Likewise, the input voltage stays between 2.31V and 2.65V, comfortably inside the valid input range of 0V to 3.8V.
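The same check can be written down as a quick headroom calculation. This is only a sketch: the numbers are the ones quoted above, and the helper name `margins` is made up for this example.

```python
def margins(signal_min, signal_max, limit_min, limit_max):
    """Return (low-side, high-side) headroom in volts.

    Both values are positive when the signal stays inside the limits.
    """
    return signal_min - limit_min, limit_max - signal_max


# Output pin (VF1) against the output swing limits quoted in the text.
out_low, out_high = margins(1.97, 3.0, 1.1, 3.9)
# Input pin (INN) against the valid input range quoted in the text.
in_low, in_high = margins(2.31, 2.65, 0.0, 3.8)

output_ok = out_low > 0 and out_high > 0
input_ok = in_low > 0 and in_high > 0

print(f"Output headroom: {out_low:.2f} V low side, {out_high:.2f} V high side")
print(f"Input headroom:  {in_low:.2f} V low side, {in_high:.2f} V high side")
print(f"Output in range: {output_ok}, input in range: {input_ok}")
```

Looking at headroom rather than a simple pass/fail makes it easier to judge how much larger the signal could get before the amplifier runs out of range.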

Figure 3: LMH5401 transient response with additional probe information shown

Now that we’ve verified that the amplifier operating voltages are well within the range of the amplifier, what else should we simulate?

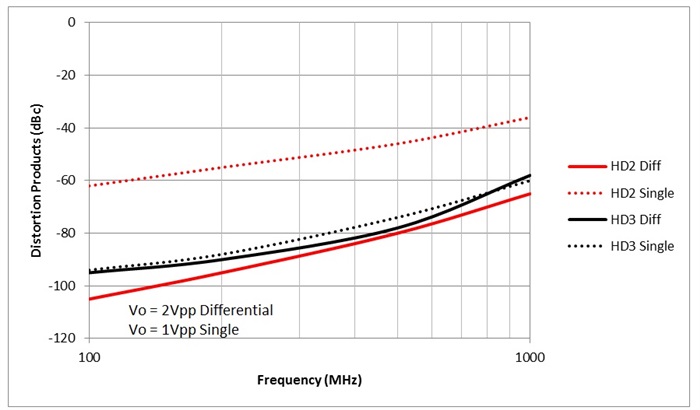

The next thing I usually check is the AC response. The AC transfer characteristic simulation does not require any circuit changes. It substitutes a small-signal, swept source for VG1 automatically. Figure 4 shows these results.

Figure 4: LMH5401 AC, small-signal response

Everything here looks good. The PD chart is not relevant to an AC sweep, so we can ignore it. VF1 (-1.03dB) is the response of one output, and VOUT (5.15dB) is the differential response. The 6.18dB difference between VF1 and VOUT is very close to the expected 6.02dB: the differential voltage is twice the voltage on a single output, and we are looking at voltages, not power.
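For anyone who wants to check the arithmetic, here is a short sketch. The two dB readings come from the text above; the variable names are just for this example.

```python
import math

vf1_db = -1.03   # single-ended output response, from the text
vout_db = 5.15   # differential output response, from the text

# Difference seen in the simulation.
measured_delta_db = vout_db - vf1_db

# A differential output is twice the single-ended voltage, and a voltage
# ratio converts to dB with 20*log10 (10*log10 would apply to power).
expected_delta_db = 20 * math.log10(2)

print(f"Measured difference: {measured_delta_db:.2f} dB")
print(f"Expected difference: {expected_delta_db:.2f} dB")
print(f"Discrepancy:         {measured_delta_db - expected_delta_db:.2f} dB")
```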

On the VIN chart, note how the input voltage increases at high frequency. This is because the loop gain of the amplifier falls, and the active termination I mentioned above becomes less effective. The amplifier gain increases along with the input impedance, so less signal is lost across the source resistance (R1). The only drawback to this behavior is that the amplifier noise increases. You can run the noise analysis in TINA-TI to confirm this.
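One rough way to picture the rising input voltage is a resistive divider formed by the source resistance and the amplifier's input impedance. The sketch below uses hypothetical values (a 50-ohm source and two made-up input impedances) purely to show the direction of the effect; it is not a model of the LMH5401.

```python
def input_fraction(r_source: float, z_in: float) -> float:
    """Fraction of the source voltage that reaches the amplifier input."""
    return z_in / (r_source + z_in)


# Hypothetical values, for illustration only.
r1 = 50.0               # source resistance, ohms
z_in_low_freq = 50.0    # input impedance while active termination is effective
z_in_high_freq = 100.0  # higher input impedance once loop gain has dropped

frac_low = input_fraction(r1, z_in_low_freq)
frac_high = input_fraction(r1, z_in_high_freq)

print(f"Low frequency:  {frac_low:.3f} of the source voltage at the input")
print(f"High frequency: {frac_high:.3f} of the source voltage at the input")
```

As the input impedance rises, a larger share of the source voltage appears at the input, which matches the upward trend on the VIN chart.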

While there are many simulation tools out there, be sure to pick one that will allow you to quickly and easily find the data you’re looking for, like TINA-TI.

The high-speed amplifier applications team has fielded a lot of questions about how to use TINA-TI in the High Speed Amplifier Forum. Check out our answers, and if you have a question that hasn’t been answered, I hope you’ll let us know by posting. We’re here to help.

Additional resources:

- Download TINA-TI to start working.

- Read other blog posts about working with TINA-TI, including this one about questions you may be too afraid to ask.